Why do Machine Learning models struggle to gain traction in clinical practice and what can we do about it?

by Hailin Song, Insight DCU

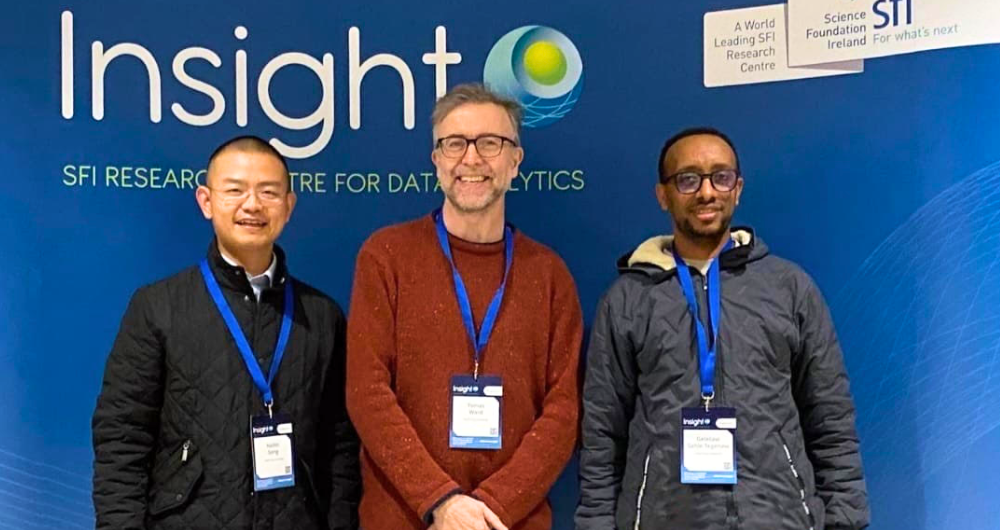

The gap between a model's laboratory performance and its performance in real-world clinical settings often arises from a combination of technical, environmental and systemic factors. As a representative use case, a team at the Insight Research Ireland Centre for Data Analytics examined neonatal EEG (electroencephalography) seizure detection models reported in the literature. The study was conducted by Dr. Geletaw Sahle Tegenaw (right), Hailin Song (left), and Prof. Tomas Ward (centre). It asked the question ‘Generalisation or Mirage?’ and investigated data leakage and reported performance in neonatal EEG seizure detection ML models described in peer-reviewed studies.

These findings were recently published in Advances in Data Mining for Biomedical Informatics and Healthcare, in the journal BioData Mining (https://link.springer.com/article/10.1186/s13040-025-00516-y#Abs1). Of the 48 studies included, 87.69% used public datasets, but only 22.9% reported code availability. While studies with a low risk of data leakage are common, we found that 16.7% of papers, or 31.7% of study observations, exhibited a high risk of data leakage. The PLS-SEM (partial least squares structural equation modelling) results and the chi-square test agree, supporting the conclusions that rigorous data partitioning reduces leakage and that leakage distorts reported performance. The data partitioning (validation) strategy emerged as a strong and statistically significant predictor of data leakage (p < 0.001, R² = 0.598), explaining 59.8% of its variance.
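To make the role of data partitioning concrete, here is a minimal sketch of one common safeguard: splitting by subject, so that all EEG windows from a given infant fall on the same side of the train/test boundary. It is an illustration only, not the procedure used in the study or in any of the reviewed papers. The function names (`split_by_subject`, `shared_subjects`), the infant identifiers, and the window counts are all hypothetical.

```python
import random

def split_by_subject(window_subjects, test_fraction=0.25, seed=0):
    """Assign whole subjects to train or test, so no subject appears in both."""
    subjects = sorted(set(window_subjects))
    random.Random(seed).shuffle(subjects)
    n_test = max(1, round(len(subjects) * test_fraction))
    test_subjects = set(subjects[:n_test])
    train_idx = [i for i, s in enumerate(window_subjects) if s not in test_subjects]
    test_idx = [i for i, s in enumerate(window_subjects) if s in test_subjects]
    return train_idx, test_idx

def shared_subjects(window_subjects, train_idx, test_idx):
    """Return the subjects that contribute windows to both train and test."""
    train_subjects = {window_subjects[i] for i in train_idx}
    test_subjects = {window_subjects[i] for i in test_idx}
    return train_subjects & test_subjects

# Hypothetical recording set: 8 infants, 50 EEG windows each.
window_subjects = [f"infant_{k}" for k in range(8) for _ in range(50)]

# Window-level split (every fourth window goes to test). This stands in for
# a shuffle over windows and ignores which infant each window came from.
all_idx = range(len(window_subjects))
leaky_test = [i for i in all_idx if i % 4 == 0]
leaky_train = [i for i in all_idx if i % 4 != 0]

# Subject-level split: each infant is entirely in train or entirely in test.
train_idx, test_idx = split_by_subject(window_subjects)

print("window-level split, shared infants:",
      len(shared_subjects(window_subjects, leaky_train, leaky_test)))
print("subject-level split, shared infants:",
      len(shared_subjects(window_subjects, train_idx, test_idx)))
```

In the window-level split every infant contributes data to both sets, so a model can be rewarded for recognising an individual's EEG rather than for detecting seizures. The subject-level split removes that overlap; the same overlap check can double as a simple leakage audit on an existing split.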

Data leakage also has a statistically significant effect on reported model performance (p = 0.005, R² = 0.202). It accounts for only about 20.2% of the variation in performance, however, indicating that other factors, such as dataset size, class balance, model architecture, and preprocessing, may play a substantial role. Overall, the evidence suggests that the relationship between leakage and performance generalises, though with some caution given the variability in accuracy outcomes across studies. The findings underscore the need for methodological standards to mitigate leakage and improve transparency in neonatal EEG seizure detection models. To move beyond the “mirage” of inflated reported performance and ensure generalisability in EEG seizure detection models, clinical research communities and conference organisers should emphasise transparency and methodological standards; journals should require explicit leakage audits and a reproducibility checklist; and funding agencies should prioritise proposals that demonstrate strong methodological robustness.

The team is currently training and fine-tuning a transformer model on short EEG windows to enable real-time monitoring and detection, and to support the development of a ChatGPT-like system for EEG interpretation and the detection of neurological diseases.